DevOps Interview

Read the Interview with Mr. Mahesh Chandra about DevOps and its usage.

A data pipeline is a set of tools and processes used to automate the movement and transformation of data between a source system and a target repository.

Source systems often have different methods of processing and storing data than target systems. Therefore, data pipeline software automates the process of extracting data from many disparate source systems, transforming, combining and validating that data, and loading it into the target repository.
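The extract, transform/combine/validate and load stages described above can be sketched as follows. This is a minimal illustration, not a production design: the two in-memory sources (`CRM_CSV`, `BILLING_JSON`), their field names, and the plain Python list standing in for the target repository are all hypothetical.

```python
import csv
import io
import json

# Hypothetical source 1: a CRM export in CSV form.
CRM_CSV = "customer_id,name\n1,Asha\n2,Ravi\n"
# Hypothetical source 2: a billing system that emits JSON with different field names.
BILLING_JSON = (
    '[{"cust": 1, "amount": "120.50"},'
    ' {"cust": 2, "amount": "n/a"},'
    ' {"cust": 3, "amount": "75"}]'
)


def extract():
    """Read raw records from each source in its native format."""
    customers = list(csv.DictReader(io.StringIO(CRM_CSV)))
    invoices = json.loads(BILLING_JSON)
    return customers, invoices


def transform(customers, invoices):
    """Normalise types, combine the two sources, and validate each row."""
    names = {int(c["customer_id"]): c["name"] for c in customers}
    rows, rejected = [], []
    for inv in invoices:
        try:
            amount = float(inv["amount"])  # validation: amount must be numeric
            name = names[inv["cust"]]      # validation: customer must exist
        except (ValueError, KeyError):
            rejected.append(inv)
            continue
        rows.append({"customer_id": inv["cust"], "name": name, "amount": amount})
    return rows, rejected


def load(rows, target):
    """Append the cleaned rows to the target (a list stands in for a warehouse table)."""
    target.extend(rows)
    return len(rows)


def run_pipeline(target):
    customers, invoices = extract()
    rows, rejected = transform(customers, invoices)
    return load(rows, target), rejected
```

Calling `run_pipeline(warehouse)` with an empty list loads only the rows that pass both checks and returns the rejected records so they can be inspected rather than silently dropped.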

Building data pipelines breaks down data silos and creates a 360-degree view of the business. Businesses can then apply BI and analytics tools to create data visualisations and dashboards, and to derive and share actionable insights from the data.

Batch data pipeline: A batch data pipeline periodically transfers data in bulk from source to target. For example, the pipeline can run once every twelve hours. A batch pipeline can also be scheduled to run at a specific time each day when system traffic is low.
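As a rough sketch of the batch pattern, the snippet below moves every record that falls inside one time window in a single bulk transfer and computes when the next run is due. The record layout (a `ts` timestamp field), the function names, and the lists standing in for source and target are assumptions made for illustration; a real deployment would normally delegate the timing to a scheduler.

```python
from datetime import datetime, timedelta


def next_run(last_run, interval_hours=12):
    """Return the time the next batch is due, e.g. every twelve hours."""
    return last_run + timedelta(hours=interval_hours)


def run_batch(source, target, since, until):
    """Copy every record with since <= ts < until to the target in one go."""
    batch = [record for record in source if since <= record["ts"] < until]
    target.extend(batch)
    return len(batch)


# Hypothetical source records accumulated over a day.
source = [
    {"id": 1, "ts": datetime(2024, 1, 1, 3, 0)},
    {"id": 2, "ts": datetime(2024, 1, 1, 11, 59)},
    {"id": 3, "ts": datetime(2024, 1, 1, 15, 30)},
]
target = []

window_start = datetime(2024, 1, 1, 0, 0)
window_end = next_run(window_start)  # 12:00 on the same day
moved = run_batch(source, target, window_start, window_end)
```

Using a half-open window (`since <= ts < until`) means consecutive runs neither skip nor duplicate a record that lands exactly on a boundary.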

Streaming data pipeline: A streaming data pipeline moves data continuously from source to target, translating it into the target format in real time. This suits data that requires continuous updating, for example data collected from a sensor that tracks traffic.
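The streaming pattern can be sketched with a Python generator, where each reading is translated and forwarded as soon as it arrives instead of waiting for a batch window. The raw reading format (`"sensor,timestamp,count"`), the field names in the target format, and the finite list standing in for an unbounded sensor feed are all hypothetical.

```python
def sensor_readings():
    """Stand-in for an unbounded feed from a traffic sensor (finite here so the sketch ends)."""
    yield from ["S1,1700000000,42", "S1,1700000060,37", "S2,1700000060,12"]


def to_target_format(raw):
    """Translate one raw reading into the (assumed) target record format."""
    sensor_id, timestamp, count = raw.split(",")
    return {
        "sensor_id": sensor_id,
        "timestamp": int(timestamp),
        "vehicles_per_minute": int(count),
    }


def stream(source, sink):
    """Process records one at a time; nothing is buffered between source and target."""
    for raw in source:
        sink(to_target_format(raw))


received = []
stream(sensor_readings(), received.append)
```

Here `received.append` plays the role of the target; in practice the sink would be a call that writes to a message queue, database, or dashboard.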

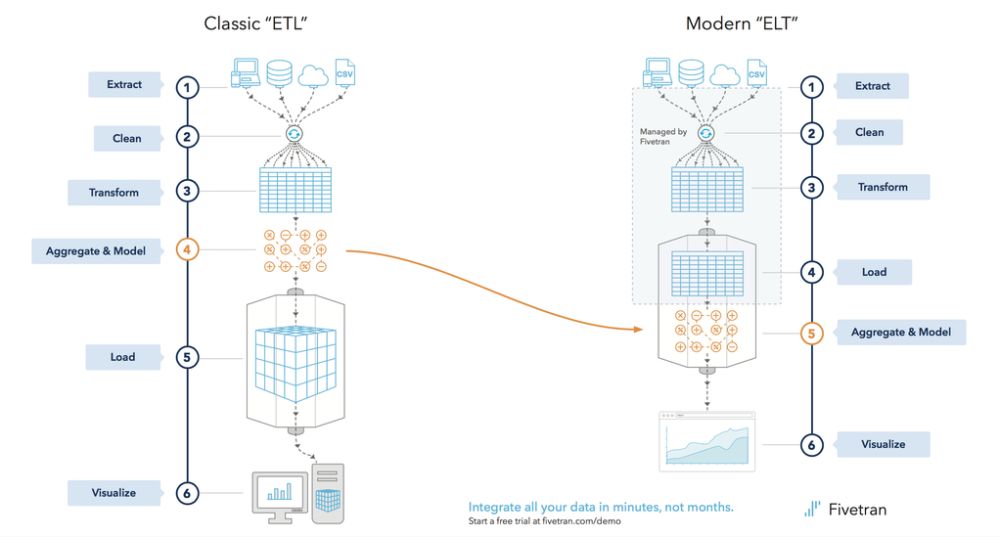

ETL (extract, transform and load) is a data integration process that extracts raw data from various data sources, transforms it on a separate data processing server, and then loads it into a target system such as a data warehouse, data mart or database for analysis or other purposes.

ELT stands for “extract, load, and transform”. It is the process a data pipeline uses to replicate data from a source system into a target system such as a cloud data warehouse.

ELT is a modern variation of the older process of extract, transform, and load (ETL), in which transformations take place before the data is loaded.

As mentioned above, the ETL process requires a separate processing engine to run transformations before the data is loaded into a target system. ELT, on the other hand, uses the processing engine of the target system itself to transform the data. Removing this intermediate step helps streamline the data loading process.
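To make the difference concrete, the sketch below runs the same cleanup both ways against an in-memory SQLite database, which stands in for the target warehouse. In `etl`, Python plays the separate processing engine and only clean rows are loaded. In `elt`, the raw rows are loaded untouched and the target's own SQL engine does the transformation. The sample data, table names, and column names are hypothetical.

```python
import sqlite3

# Hypothetical raw extract: everything arrives as untidy text.
RAW_ORDERS = [("1", " 19.50 ", "in"), ("2", "5.00", "IN"), ("3", "7.25", "us")]


def etl(conn):
    """ETL: transform outside the target, then load only the cleaned rows."""
    clean = [(int(i), float(a.strip()), c.upper()) for i, a, c in RAW_ORDERS]
    conn.execute("CREATE TABLE orders_etl (id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", clean)


def elt(conn):
    """ELT: load the raw rows first, then transform with the target's SQL engine."""
    conn.execute("CREATE TABLE raw_orders (id TEXT, amount TEXT, country TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT CAST(id AS INTEGER) AS id, "
        "CAST(TRIM(amount) AS REAL) AS amount, "
        "UPPER(country) AS country "
        "FROM raw_orders"
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
etl_rows = conn.execute("SELECT id, amount, country FROM orders_etl ORDER BY id").fetchall()
elt_rows = conn.execute("SELECT id, amount, country FROM orders_elt ORDER BY id").fetchall()
```

Both routes end with the same cleaned table. One practical difference visible here is that the ELT route also keeps `raw_orders` in the target, so the transformation can be rerun or changed later without extracting the data again.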

Since ETL process transforms data prior to the loading stage,

As organisations adopt a data-driven approach to grow and create value for their business, the challenges in traditional methods of getting the data required for analysis mean it takes days or months before the business can use the data…
